March 2015

The Evolution of the FE

The Fundamentals of Engineering Exam is adapting to curricula changes and new technology while remaining a critical step in the licensure process.

BY EVA KAPLAN-LEISERSON

Since it was first offered nationally in 1965, the FE exam has served as the second stop in the engineering licensure process, after earning an accredited degree. It remains the only national exam designed to test candidates on knowledge and skills gained in college engineering programs.

Over the years, the exam has changed to better reflect academic curricula and industry needs, as well as to update testing practices. A little over a year ago, the exam transitioned to computer-based testing, which has increased flexibility for test-takers while deterring cheaters and eliminating the security risks of shipping exam packets. Concurrent adjustments to content have ensured the discipline-specific FEs are more closely aligned with curricula.

A Series of Changes

If you sat for the FE exam prior to 1996, you took the same exam as all of your fellow test-takers nationwide.

But that year, the National Council of Examiners for Engineering and Surveying (NCEES) added an afternoon portion focused on specific disciplines: civil, mechanical, electrical, industrial, chemical, or general (later called “other disciplines”). Environmental engineering joined the list in 2002.

In this format, the morning included general engineering knowledge, such as math or ethics; the afternoon concentrated on specialized knowledge—for example, structural analysis for civil engineers.

Says NCEES Chief Operating Officer and NSPE member Davy McDowell, P.E., “the exam had always been really centered on the student who was in the senior year of the engineering program, so it seemed to be a natural fit to test them on things that they would experience in their entire career in school.”

Because the FE is a nationally normed exam, over the years institutions have been able to use exam results to assess the strengths and weaknesses of their programs, particularly for ABET accreditation (see sidebar). The move to a discipline-specific format also allowed schools to gain more information about their content beyond what was taught in freshman and sophomore years. The change was made with such assessment in mind, says McDowell. The council and its leadership “saw the exam could have a dual purpose.”

The last pencil-and-paper version of the FE took eight hours and consisted of 180 questions. It was offered twice a year, in October and April. Test-takers typically had to wait six to eight weeks for their results.

The Move to Computer

In the late 1990s, NCEES formed a task force to examine transitioning its exams to computer-based testing (CBT). But, according to McDowell, student focus groups highlighted the need to further grow awareness of engineering licensure and the FE exam before making major changes to the test.

The organization spent time talking to students about the importance of licensure and promoting the use of the FE exam as an ABET outcomes assessment tool. Concurrently, NCEES began revising exam questions to ensure they would be suitable for the eventual move to CBT.

From 2007 to 2013, the number of FE examinees grew from 48,000 to 56,000.

Finally, in 2010, NCEES voted at its annual meeting to transition to a computer-based format. Grant Crawford, P.E., professor of mechanical engineering at Quinnipiac University in Connecticut, served as vice chair of the FE exam committee from 2010–12 and chair from 2012–14. He acted as the “conduit” between council staff and the roughly 80 volunteers helping to convert the exam.

The process included steps such as:

- Reviewing and changing the exam specifications for each discipline in response to a content review on academic practice and industry needs;
- Examining about 40,000 questions to determine whether they fit within the new specifications;
- Removing questions and writing new questions as needed, ensuring they were neither too easy nor too difficult;
- Rewriting the provided reference manual to fit the new specifications;
- Selecting a CBT vendor (Pearson VUE); and
- Running exam simulations with Pearson to ensure a test-taker would get the same result with different exams (i.e., checking reliability).

Crawford calls the process “an amazingly incredible amount of work” but says it was nonetheless a smooth and easy experience, thanks to the volunteers who jumped in to help.

Content Changes

NCEES completes a content review for the FE every five to seven years, asking faculty what they expect students to learn while in school and working engineers what they expect students to know upon graduation.

The latest survey, conducted in 2011, drove some key changes as the exam moved to CBT. According to McDowell, although there were still some core topics that all engineers needed to know, there weren’t enough to comprise a separate section. NCEES decided to remove the morning portion and instead weave the common knowledge into the discipline-specific exams. McDowell explains that all the FE exams include topics such as calculus, engineering economics, and ethics.

NCEES also adjusted specifications, based on the review, to remove content no longer taught in particular disciplines. For instance, thermodynamics was removed from the electrical and industrial exams.

David Whitman, P.E., is a professor of engineering education at the University of Wyoming and past NCEES president who has spent more than a decade on the FE exam development committee. As he puts it, “Why should we be testing them on material that at best all they’re doing is taking a two-hour review course and maybe making a few educated guesses?”

“The exam is now a better reflection of the curriculum the faculty in the nation have created in the last 10 years,” he says.

Brian Van Nortwick, EIT, took the civil FE exam in April 2014 as a graduation requirement at the New Jersey Institute of Technology. Now a staff engineer at H2M architects + engineers, he says the new format enabled him to focus on his core areas of knowledge instead of having to devote time to subjects that weren’t in his degree program, such as chemistry.

With the new exam, NCEES was able to reduce the number of questions from 180 to 110. The test now includes 100 scored questions and 10 unscored questions that are being trialed for potential future use.

The shortened exam also cut the time required: from eight hours for just the exam, not including lunch and orientation, to six hours, including tutorial and break.

And with the move to CBT, the exam can be offered more frequently. It’s now given in recurring testing windows, two months on and one month off, which allows time to republish question sets if needed.

Another difference: each examinee now gets a unique exam created “on the fly” from the massive question bank.

NCEES Benefits

The change to computer-based testing has relieved longstanding security concerns.

McDowell explains that shipping exams to 250–300 sites across the country, and then back, was always a stressful endeavor.

If the exam booklets met with disaster—such as the time a substance spilled on them in shipment and the shipping company tossed them in a landfill—the organization would have to determine whether the questions needed to be tossed out for security purposes.

Developing new questions is expensive, as NCEES has to pay to bring volunteers from all over the country to council headquarters in Clemson, South Carolina, and provide them with food and lodging. So when an exam is compromised, “it’s a sad day for a number of reasons,” McDowell says, “dollar-wise as well as we lost very good questions that statistically did what we wanted them to do.”

Pearson testing centers are focused on security, he notes. For instance, a palm vein scan at check-in ensures that a retaker is the same person and not an “evil twin,” says McDowell. A camera atop every carrel helps ensure that examinees aren’t cheating.

McDowell also points out that the move to testing centers ensures uniformity. Previously, test-takers might have been in an airplane hangar, a hotel ballroom, or an engineering building. “Moving to CBT allowed for those very uniform testing conditions so everybody is feeling the same way on exam day,” he says.

Examinee Benefits

For test-takers, the more flexible schedule offers advantages. Previously, student athletes or those who got sick or had family emergencies would have to wait six more months, says Crawford.

And the computer-based testing enables examinees to get results much faster, within seven to 10 days.

This is one of the changes on which NCEES has received the most positive feedback. The other is the searchable reference book included on the testing screen. Crawford says NCEES was worried that not having a paper reference book in front of students might be a hindrance. On the contrary, “the current generation of students embraced [the searchable electronic version].” He believes increases in pass rates may be attributable to candidates more easily finding information.

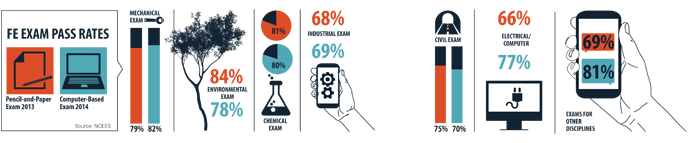

On the whole, pass rates for the new computer-based exams have been similar to those for the pencil-and-paper exams, McDowell notes.

Pitfalls and Challenges

There is one drawback to the move to computer-based testing that NCEES hopes will be temporary: a significant drop in the number of examinees. McDowell attributes the 20% decrease to a “procrastination effect.” If there are only two dates to take the exam, he explains, candidates rush to sign up so they don’t miss them. But with increased flexibility, candidates tend to put the test off.

The NCEES COO says the council had been warned about this effect by other groups that had undergone a similar transition. He hopes that the volume of test-takers will correct itself over time. The organization also may need to work harder, in collaboration with engineering societies, to ensure students understand the value of the exam and of getting licensed, he says.

Those outside of NCEES point out two other potential pitfalls. One is price. The cost of the exam has increased from $125 to $225, which concerns NSPE members at one school. The previous and current heads of the chemical and materials engineering department at New Mexico State University, Martha Mitchell, P.E., and David Rockstraw, P.E., explain that because of the increased cost, the department can no longer require students to take the exam, nor pay for it as it once did.

The chair-elect of NSPE’s Professional Engineers in Higher Education interest group, Thomas Hulbert, P.E., highlights another challenge. He organizes and teaches FE review courses for the Massachusetts Society of Professional Engineers, but he struggles to put together a cost-effective course with the fully discipline-specific exams. “I’ve been working for almost two years to try to develop a course,” says the retired associate dean of engineering at Northeastern University.

Hulbert explains that while he could run separate prep courses for each exam, it’s tough to get a critical mass of students to support them. He is the contact person for review courses on MSPE’s website and receives perhaps two or three e-mails a month. “Each seems to be in a different discipline,” he says.

A Look Ahead

Still, McDowell says today’s FE exam isn’t greatly different from the exam of the past. “The fundamentals of engineering really haven’t changed a great deal since some really brilliant people from many years ago figured out gravity, speed, forces, [etc.],” he points out.

Opportunity does remain for future tweaks, such as a move to year-round testing, although McDowell says that won’t happen this year, as council policies would have to be changed. In addition, computer-based testing opens up the possibility for new types of questions with features such as video segments or drag and place.

“This is just the next evolution of testing,” says McDowell. “We went from the pencil-and-paper exam to a computer-based exam and then we are going to take the computer-based to a new level. There are some neat ideas and ingenious ways to test that we feel we will get into in the future.”

The FE for Outcomes Assessment

Since the 1990s, NCEES has provided accredited engineering programs with FE exam reports that summarize results by topic and offer national comparisons. Programs can use the information to assess student strengths and weaknesses and evaluate outcomes.

David Whitman, P.E., engineering education professor at the University of Wyoming, has coauthored NCEES whitepapers on outcomes assessment and conducts presentations on the topic. He explains that the reports are a great assessment tool because they offer apples-to-apples comparisons and “hard and fast numbers” that can show ABET why an institution is making changes.

The University of Wyoming is one of many schools that require students to take the FE, but not to pass it, in order to gather the data. The school has made changes to programs based on the reports. For instance, after a fairly significant drop in the scores of one subject over a year and a half, an instructor who had been removed from a course was returned to it. Scores went back up.

NSPE member Scott Sabol, P.E., a professor in the architectural and building engineering technology department at Vermont Tech, notes that his department uses the results to determine not only what to change but also what not to change, based on the areas where students perform well.

Brian Swenty, P.E., chair of the mechanical and civil engineering department at the University of Evansville in Indiana, explains that although his department also uses other assessment methods, the FE “is the key, the lynchpin. Without the FE subject scores and data, I don’t think the assessment program and processes would be nearly as good as they are.”

According to NCEES, the reports are increasingly being used for outcomes assessment. In a 2010 council survey of institutions, 80% of respondents indicated that at least one program was using the reports for that purpose (the response rate was one-quarter).

Whitman believes the recent changes to the exam make it a stronger tool for assessment because it is more focused. From a testing point of view, he says, there are no longer “wasted questions” on areas that programs don’t cover.

Visit http://ncees.org/licensure/educator-resources to learn more about using the FE exam as an outcomes assessment tool. NCEES’s August 2014 Licensure Exchange summarizes the ways the reports have changed with the new FE exam.

NSPE’s Professional Engineers in Higher Education interest group published its own whitepaper on using the previous version of the FE for outcomes assessment. Find it at www.nspe.org/resources/interest-groups under “Higher Education.”
